AR’s iPhone Moment: OpenAI and Jony Ive to the Rescue

Why OpenAI can and will build category-defining AR glasses

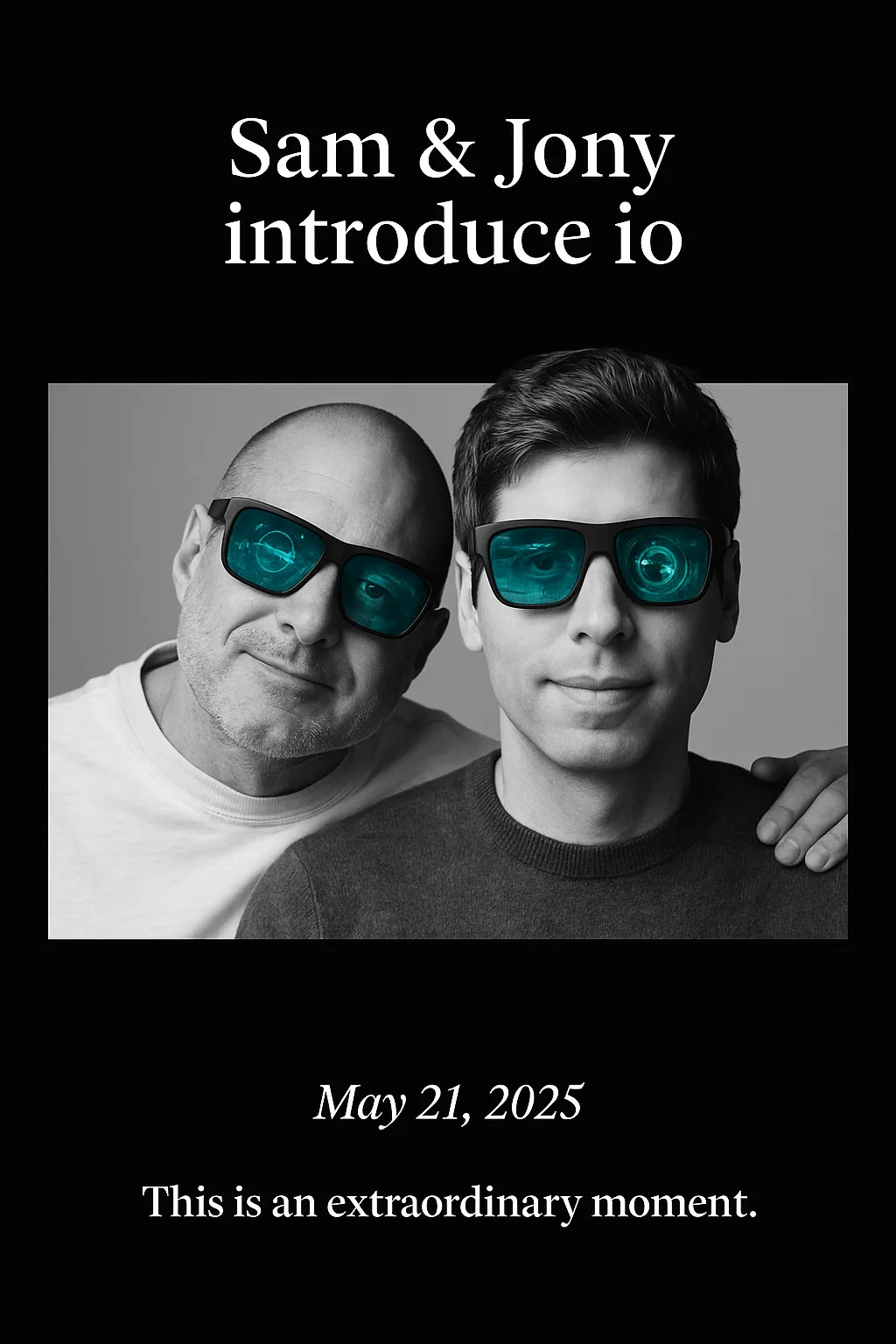

The AI juggernaut and the Michael Jordan of product design are tying the knot.

This feels like history in the making. And if you're a spatial computing enthusiast (AR/VR/XR), it also feels like hope…

In case you haven't heard the news: OpenAI just acquired Jony Ive's AI hardware startup, ‘io’, for $6.5B+.

The internet is now abuzz with speculation. Everyone is asking the same question: what exactly are they going to build?

My bet?

OpenAI is going to (eventually) build category-defining AR glasses.

And in pairing with Jony Ive, they're not just trying to compete… they're trying to change the world.

This could yield the "iPhone moment" for AR that many of us have been waiting for, but with the AI-first twist this form factor can't live without.

The thesis is clear: AI needs a better way to understand and interact with our world, to break free from the confines of the 2D screen. It needs eyes, it needs context, and it needs to be with us, not just on a device.

And while the exact nature of that device remains shrouded in mystery, one thing is certain: when you fuse the world’s most potent AI brain with the planet’s most revered design sensibility, you’re not aiming to create a slightly more elegant chatbot interface or a me-too hardware product (e.g. speakers, earbuds, phones, etc.).

You’re aiming for a revolution.

What could be more worthy of such a leap?

AR glasses will be a full-circle evolution in human-computer interaction, surfacing information in the way our brains have evolved to interpret the world. They’ll also bring our eyes back up to the world and to each other.

Imagine… a ‘generative UI’ that doesn’t just overlay information, it injects your perceptual system with maximum agency; an AI that moves beyond mere information retrieval to become an intuitive guide, an amplifier of your own curiosity and creativity.

The ultimate result? Machines that conform to us, rather than us conforming to them (hunched over, distracted, restless, addicted).

This is the vision that’s kept me in the industry for all these years, despite all the ups and (mostly) downs.

The implications for how we learn, work, create, and connect are profound and hard to imagine, especially if you’ve never tried really, really good augmented reality. I’d reckon fewer than a thousand people have. On the entire planet.

As for our wider industry, this should be a wake-up call. Apple, Meta, Google, the whole AR/VR/XR/Spatial Computing alphabet soup: it’s time to kick things into gear and up our game.

And if you’re just a passive observer… get your popcorn out. Because the AR + AI race just got a whole lot more interesting.

Okay… Easy there cowboy

I know what you're thinking. Pump the brakes, Evan, you're way over your skis.

There's zero guarantee OpenAI will get into AR. In fact, Sam Altman just told the WSJ they aren't making glasses.

What we do know is that Sam has a prototype, and he’s told OpenAI staff it’s “the coolest piece of technology that the world will have ever seen.”

So far, the rumor mill points to some sort of little puck-like speaker thing that you can put on your desk, in your pocket, around your neck, etc.

Something like this…

Call me crazy, but this (or anything remotely close) would be far from “the coolest technology we’ve ever seen”.

I’m not buying it. I stand by my thesis, and here's why.

(1) AR Patents: First, OpenAI has filed numerous patents for AR.

Sam’s also one sly cat, and one of the last people I’d want to face at the poker table… Do you really think he’s going to show all his cards?

I could end my case here but let's keep going.

(2) A family of connected devices: Both Sam and Ive said they're going to make a family of devices.

Jony Ive has also suggested he's on a mission to rid the world of screens, most notably during this interview with Patrick Collison at the Stripe conference. It's an incredible conversation and will give you an inside glimpse into his motives. You can sense hints of remorse for what the screen/iPhone has done to the world: reducing our attention spans, creating whole new forms of addiction, inflaming our insecurities, the list goes on...

He then said in the announcement video, "people have an appetite for something new, which is a reflection on a sort of unease with where we currently are..."

Now, I completely agree with getting rid of screens. But are we really going to get rid of the visual elements of computing?

(3) Seeing is believing: We are visual, experiential creatures. We need to see it to believe it. We need to see the inside of our Airbnb, to fully inspect our new car, and to watch (360) videos from our loved ones.

I just don't see a world in which Ive doesn’t embrace our visual nature, and doesn’t embrace the world as our new desktop background.

What are we gonna do instead… carry a puck/little box with a projector, point it at a bevy of surfaces, and hope it pans out?

Methinks not...

(4) AR = Ultimate Fun & Delight: In that same video, Ive talks about his design thesis and the ethos that drives him.

He believes product design should be all about eliciting delight, and creating more pure joy and fun in the world....

Again, most people haven't seen what truly good AR looks like. I have, and I cried.

It was from trying an actual demo of this experience below, which we built with Nike back in my days at (OG) Meta:

This experience is what drives me to this day, and I’m telling you… it was the pinnacle of delight (within the realms of creativity & productivity).

The Strategy

I could keep going with my speculations, but I'll stop there for now.

Just as important as what they build is the how and why... aka their strategy. After all, what they build won't matter if they can't compete.

So, given who they're up against (Apple, Google, Meta), you have to ask the question... is this even the right strategy? Or is it a distraction?

Because in many ways... they're putting themselves into a big tech quantum state of superposition, trying to be a bit like Apple, a bit like Google, and everything else in between.

On one hand, you could argue OpenAI needs to focus on supplanting Google, and take a similar tack: building the OS/software/killer app (e.g. search) and have their apps on every device, in every ecosystem, in order to maximize scale.

And when Sam founded the company, it indeed was in direct opposition to Google. His catalyzing thesis was that Google was poised to run away with the AI race, and someone needed to compete.

But on the other hand... competing head-on with Google is terrifying, especially now (did you see the last Google I/O? Mind blowing). Red ocean blood baths abound.

Now Apple? A different story. They're limping out of the gates in the AI race. Heck, you could argue they're still at the starting line. Their effort to date has been nothing short of disastrous, mired in politics and bureaucracy (as this recent Bloomberg article confirms).

In tandem, the iPhone hasn't meaningfully changed in generations, the Apple Vision Pro is a public R&D project (which I fully endorse, for the record), and developers continue to sour on Apple's App Store philosophy and mechanics.

In other words… Apple has cracks in its armor. Google is getting its mojo back. So going after Apple actually makes sense. And since we're obviously living in a simulation, OpenAI now has the man Steve Jobs called his 'spiritual partner', making the onslaught all the more fun and dramatic.

Regardless, things just got much, much more interesting.

Call it speculation, call it wishful thinking, call it hopium: AR glasses are coming at some point.

It might not be the first device. In fact, considering the technical hurdles that remain for AR, it almost certainly won't be. But I'm betting you my bottom dollar: it's on the mind, it's on the roadmap, and it's coming.

As such, I'm updating our AR + AI Racecard with OpenAI and Google.

You'll find my 'pillar-based' analysis below.

For a similar analysis on Meta & Apple, check out my last article: The AR + AI Race Non-finale

With that, get your popcorn ready. The outcomes are uncertain, but the drama is sure to be first rate.

Oh, and if you're so compelled, tell me your thoughts in the comments below!

Who do you think is going to win? Where are the gaps in my thinking?

The AR + AI Racecard

The Contenders

Apple: The incumbent king of premium hardware, software integration, and a fiercely (but increasingly less) loyal ecosystem.

Meta: The relentless metaverse visionary, betting big on social VR/AR and open ecosystem development.

OpenAI-IO: The AI-native, design-led disruptor, poised to redefine AI-human interaction.

Google: The re-awakened giant of AI and information, with deep pockets, vast data, and significant (if sometimes fragmented) AR/AI initiatives (think Project Astra, ARCore, Lens, etc.).

We analyzed Apple & Meta in our last essay (pasted further below). So let’s now break down our new entrants across the same critical battlefronts:

Software: AI Agents Will Redefine the OS

OpenAI-IO

Forget app grids and traditional UI/UX. If my hunch is right, OpenAI-IO isn’t just building AR glasses; they're crafting the native interface for AGI.

This means an OS designed from scratch around AI agents and a truly generative UI. Think less about swiping and tapping, and more about natural language interaction, with the visual interface materializing contextually, precisely when and how you need it.

Why browse for an app when an AI agent can anticipate your need or summon the exact information/tool in a visual whisper? This is the ultimate "clean slate" advantage: no iOS or Android legacy to protect, just a relentless pursuit of the most intuitive AI-human interface. The "OS" becomes the AI itself, orchestrating experiences.

Google has been telegraphing its AI agent ambitions for years (think Assistant, Lens, and the recent Project Astra demo).

Their XR software play will undoubtedly leverage this deep expertise. Expect an Android-derived (or perhaps a new, lightweight, AI-centric) OS where AI agents are first-class citizens, deeply integrated with Google’s vast knowledge graph and services (Maps, Search, Workspace).

The challenge for Google? Making it feel like a cohesive, new paradigm, not just Android shoehorned into glasses, and navigating the innovator's dilemma of how this new OS interacts with their existing Android dominance. But an AI that can "see what you see" and proactively offer assistance through AR? That’s Google’s sweet spot.

Innovator’s Dilemma: Who’s Free to Disrupt?

OpenAI-IO:

This is where OpenAI-IO shines. They have zero existing hardware cash cows to protect. No iPhone sales to cannibalize, no dominant mobile OS to tiptoe around.

They can be ruthless in their pursuit of the optimal AI-first AR experience, even if it upends current computing paradigms.

This freedom is a massive competitive advantage. Their only "legacy" is cutting-edge AI, and these AR glasses are being built to serve that master.

Google faces a more complex path. While not as hardware-centric as Apple, their Android ecosystem is vast, and their primary revenue driver is advertising, fueled by data from services like Search.

How does a deeply personal, always-on AR device fit into this? Will they create a truly open spatial OS that might compete with Android partners, or a more controlled experience to safeguard their service ecosystem?

Their history shows a willingness to experiment (and sometimes abandon), but a full-scale AR push will require navigating these internal tensions. How do you truly innovate when you are the current paradigm in so many ways?

AI: The Engine of Spatial Intelligence

OpenAI-IO:

OpenAI isn't just using AI; they are defining the frontiers of AGI. Their AR glasses won't just have AI features; they will be an AI, an embodied intelligence.

Expect foundational models running (perhaps with clever on-device/cloud hybrid approaches) that offer unparalleled reasoning, personalization, and generative capabilities directly within the user's perception. The world itself becomes the prompt.

Google’s strategy? Unmatched data, powerful models, pervasive AI

Google's AI prowess is legendary, fueled by unimaginable datasets from Search, YouTube, Maps, etc., and world-class research from Google AI and DeepMind.

Their strength lies in contextual understanding, information retrieval, and services like real-time translation or visual search (Lens). For Google XR, AI will be about delivering Google's information and services in a more ambient, spatially aware manner. The key will be harnessing this power into a cohesive, delightful user experience in AR without feeling intrusive.

Hardware: Design Ethos and Go-to-Market Approach

OpenAI-IO:

With Jony Ive at the design helm, expect nothing less than exquisitely crafted hardware that prioritizes user experience, elegance, and perhaps a minimalist aesthetic.

The focus will likely be on a premium, aspirational device where the technology feels almost invisible, seamlessly blending with the user.

The challenge? Manufacturing at scale and hitting a price point that, while likely premium, doesn't relegate it to a Veblen good. But Ive's involvement practically guarantees the product will be desirable.

Google’s hardware play will be more open and partner-centric

Google's hardware history with XR is... varied (RIP Daydream, ahem, Glass). They might not aim to be the sole, vertically integrated hardware provider like Apple or potentially OpenAI-IO.

Instead, we might see them focus on creating a core AI-XR software platform and reference designs, empowering a broader ecosystem of hardware partners (think their Pixel strategy, but for AR, or their renewed partnership with Samsung).

This approach accelerates reach but risks fragmentation and inconsistent user experiences. Their "secret sauce" will be the AI and services powering these diverse endpoints.

Founder Vision & Leadership:

OpenAI-IO:

Sam Altman is driven by a singular, audacious goal: achieving AGI.

Jony Ive is driven by an obsessive pursuit of perfect design and user experience.

This combination is electric. Altman isn't playing for incremental gains; he’s playing for a paradigm shift. Ive ensures that shift is human-centric. This is conviction-driven leadership at its finest, focused on building something genuinely new.

Sundar Pichai has clearly stated that AI is the core of Google's future, infusing every product and service. Google XR will be a key expression of this.

While perhaps not with the same singular, public-facing "bet-the-company" XR fervor as Zuck, the leadership commitment to AI as the enabler of new experiences is undeniable.

The question is whether XR hardware itself becomes a top-tier strategic priority or remains primarily a vehicle for their AI services.

Philosophy: Open vs. Closed Systems

OpenAI-IO:

This is an unknown. Will OpenAI opt for a more controlled experience, or will they finally live up to their name with a more ‘Open’ platform?

Given the tight integration of AI and the Jony Ive design ethos, the initial hardware/software experience will likely be relatively controlled to ensure quality and coherence.

However, OpenAI's broader strategy involves making its AI models available through APIs. We might see a future where the glasses are a premium conduit to an increasingly open AI platform, allowing developers to build "skills" or "agents" for the AR experience.

Google will likely continue the Android legacy – Openness with guardrails

Google’s DNA is rooted in Android’s openness, which spurred massive ecosystem growth. They’ll likely aim for a similar model in XR to encourage developer adoption and hardware diversity.

However, access to Google's core AI services and data will undoubtedly come with Google's terms, creating a "semi-open" environment.

The challenge is fostering true innovation while maintaining the quality and integrity of the user experience tied to their services.

Developer Trust & Traction:

OpenAI-IO:

If OpenAI-IO delivers on the promise of AR glasses as a direct interface to their cutting-edge AI models, developers will flock.

The opportunity to create applications powered by AGI-level intelligence in a spatial context is a greenfield of immense proportions.

Trust will be built on the power and accessibility of their AI tools and a clear value proposition for developers.

Google will aim to leverage the existing Android army while rebuilding XR faith

The existing Android developer base is massive. The key is providing them with compelling tools, consistent platform support (their XR efforts have been a bit start-stop), and clear monetization paths to translate their mobile success to spatial. Project Astra and advances in their AI services are powerful carrots.

Fashion & Culture

OpenAI-IO

Jony Ive isn't just a designer; he's a cultural icon. His involvement alone lends AR a level of design credibility and "cool factor" it has struggled to achieve.

If anyone can make face computers fashionable and desirable, it's Ive. This could significantly shift public perception.

Google certainly has a tougher path here due to the cultural missteps of Google Glass (aka the "Glasshole" legacy).

They’ll need to lean heavily on sleek partner designs (if they go that route) or develop an incredibly compelling aesthetic internally, perhaps focusing on minimalist, almost invisible tech, to win hearts and minds from a fashion perspective. Their focus will likely be more on utility first, fashion second.

Product Iteration & Consumer Comfort

OpenAI-IO

Ive is known for striving for a perfectly polished V1.

OpenAI, however, comes from a research background where rapid iteration is key. Finding the balance will be crucial.

The AI will evolve constantly, but the hardware will need to feel "right" from day one to gain consumer trust.

Google is comfortable launching products in beta and iterating publicly based on user feedback.

This can lead to faster learning but also a perception of products being perpetually unfinished.

For AR, where comfort and reliability are paramount, they'll need to ensure a high-quality baseline experience.

Distribution

OpenAI-IO

Given the likely premium nature of an Ive-designed product and OpenAI's current standing, a direct-to-consumer model, perhaps with select high-end retail partnerships, seems probable for initial launch.

Scaled distribution will be a new muscle for OpenAI to build.

If Google focuses on a platform/software approach with hardware partners, their distribution is instantly global through the existing Android device ecosystem and retail channels.

If they launch their own "Pixel-for-AR" device, distribution will be more controlled but can leverage their existing hardware channels.

Product Portfolio: The Ecosystem Driving AR Use Cases

OpenAI-IO:

OpenAI's "portfolio" is its suite of world-leading AI models.

The AR glasses become the prime delivery mechanism for these models to provide value across countless applications – from hyper-intelligent assistants to creative tools to powerful analytical interfaces.

The use cases will be defined by what their AI can do spatially.

Google's immense portfolio – Search, Maps, YouTube, Workspace, Assistant, Translate – provides an almost endless list of powerful services that can be integrated into an AR experience.

The AR glasses become a new, more contextual way to access and interact with the Google ecosystem you already use.

Initial Readout (Not a final verdict, just a summary)

OpenAI-IO

Poised to deliver massive disruption by redefining the experience of AR through an AI-first, design-led approach.

Their path is to create something so compelling and intelligent it carves out a new premium category.

Their biggest challenge: scaling hardware production and distribution from a (relative) standing start.

Google

Has all the ingredients for a dominant AR play: AI, data, an existing OS footprint, and vast services.

Their path is likely to make AR an ambient extension of the Google services ecosystem.

Their biggest challenge: a historically fragmented XR hardware strategy and the innovator's dilemma of how AR fits with their existing Android dominance.

This sets the stage for a truly fascinating four-way race.

Each player brings unique strengths and philosophies to the table. The coming years won't just be about who builds the best device, but who crafts the most compelling, useful, and ultimately indispensable experience at the intersection of AI and our perceived reality.

If you’d like to dive into a similar analysis on Meta and Apple, check out my last article here: The AR + AI Race Non-finale.