Breaking Through the Screen, Part I: A paradigm shift in human-computer interaction

Our looming augmented future

(Originally published on January 11, 2017)

As I approach my one-year mark working in augmented reality (1/11/2017), I officially have no doubt: one day, augmented reality is going to dramatically change our world.

One day.

This is the first of a series of articles that will touch on the promise of AR, discuss how it will converge with other fringe technologies, and provide a layman’s overview of the hurdles that must be cleared for that fateful day to come.

Call it augmented reality, mixed reality, holograms, digital overlays, or whatever tickles your fancy (the variety of names for this stuff has become dizzying; I’m now hearing about something called “XR”?! Stop it, people!). When you analyze the potential use cases for AR (the term Meta is settling on), it is hard to argue against the value of bringing the virtual world into the physical. Just name one function within the realm of buying, selling, creating, playing, communicating, traveling, healing (heck, even flirting one day… see the short film “Sight” by our very own Robot Geniuses. Good stuff.); the list goes on. AR can and will play a significant role in all of them.

And to those who say you’ll never wear a pair of glasses to do these things, just look at our behavior with screens today. In the early smartphone days, very few predicted that these devices would have such a radical impact on human behavior. The phone is the first thing we touch in the morning, the last thing we touch before bed, and we’re now at a point where getting through the day without it is a crippling thought. These things are practically glued to our faces as it is, and I’m one of the worst offenders out there.

After dropping my phone off at the Apple Store, I’m now dealing with the monumental challenge of organizing dinner plans with friends sans a screen. Faced with the logistical nightmare that is this temporary existence without Uber, iMessage, Google Maps, and Yelp, I’ve crumbled. Sorry guys, not going to make it this time around. Enjoy the vegan sushi… (okay, as a sashimi guy, that’s a bit of a relief).

As our dependence on these devices continues to increase, what we are seeing is the formation of, and a growing reliance on, a vast and vibrant digital world. If you think about it, everything that has defined our existence in the analog world (our identity, our relationships, our work, our knowledge, our entertainment, you name it) is now connected, streamed, updated, and shared within a virtual world. And for those things that remain very much analog, critical aspects of them are beginning to exist digitally as well. Take your car, for example. It’s becoming software on wheels. Or how about your home? You can monitor and control it on your phone, or even go as far as renting it out on the digital marketplace that is Airbnb.

Within the world of the enterprise, this concept of “things” existing simultaneously in virtual and physical form has been around for a while. It is known as the “digital twin”, or sometimes the “digital tapestry” (I’ll cover this topic in a later post). Well, thanks to the internet and the ubiquity of sensors today, almost every “thing” now has a “digital twin”, if you will. These “things” will embody this co-existence, existing in a sense both virtually and physically, and all connected in a myriad of ways. The outcome at maturity is something we’ve yet to fully comprehend.

What I’ve described is something many of you are likely familiar with by now. It’s Silicon Valley’s favorite buzzword, the “Internet of Things”. Or really, the internet of “everything”. And while the phrase has been beaten to a pulp, it’s real, and it’s happening all around us at an exciting pace.

According to Kevin Kelly (co-founder of Wired and author of The Inevitable), there were five quintillion chips embedded into objects other than computers in 2015. Don’t bother counting all those zeros. That’s 5 times 10 raised to the power of 18.

Now, as these objects become embedded with sensors and chips, they are also all being connected and networked within Silicon Valley’s (arguably) second favorite buzzword, “the cloud”, and then “cognified” with artificial intelligence (on a buzzword tear here…).

Cisco, a powerhouse sitting at the center of all this connectivity, predicts that by 2020, there will be 50 billion connected things in the cloud. That 50 billion only represents around 4% of all the “things” in the world…

So just sit back, and let your imagination run. You think we’re dependent on this digital realm today? Just imagine what our world will look like 20 years from now. Some call it scary, others find it exciting. But what I think stirs up the fear is the current relationship between humanity and technology, and in particular the way we interface with this digital world: primarily, through screens.

We’re obsessed with our screens. We’ve all seen it by now: humans in a zombie-like state, tip-tapping away on their smartphones, regardless of the absurdity of the context (the bar, driving, crossing the street…). These screens represent a barrier, a wall of sorts keeping us separated not just from this digital realm, but oftentimes from the people and the world around us. We have to invent games at the dinner table these days to prevent people from habitually reaching for their phones. Facing an awkward silence? Phone!

It acts as a security blanket, our brain’s go-to source for a serotonin/dopamine cocktail, a technological drug. We’re officially addicted, and in many ways, screens are making us less human. But I think there’s a way to breathe some humanity back into these technologies, and spin this addiction for good…

Now, you may have seen reports from the likes of Goldman Sachs and Digi-Capital, predicting the AR/VR market to be upwards of $120 billion by 2020. Of that pie, they say AR will be $90 billion. This large disparity is due to the fact that AR is going to break down that wall that is our screen, bringing the digital world into our actual reality and allowing us to interact with digital information in a way that is far more human, far more productive, and far more collaborative. This will connect us more deeply to our work, to each other, and ultimately, to ourselves in the form of heightened creativity, insight, and overall performance (we’ll unpack exactly how down the road here).

This shift towards AR, or what industry snobs like me like to call spatial computing, will happen first within the general place of work: the office of the designer, the engineer, the architect, the surgeon; the list goes on. And as the tech improves, it will eventually creep its way out into consumerville, where it’s already making its mark via Snap, Pokémon Go, and the like.

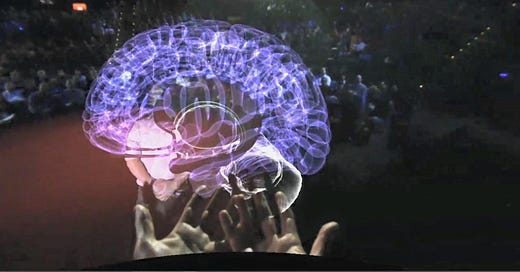

At the technology’s peak, the sensors on your AR device will come together to essentially act as a third eye, powered by all of the data, connections, and AI in the aforementioned cloud, a cloud that will eventually become a hive mind of all the world’s knowledge. These sensors will be able to see, analyze, and comprehend the world in a way that humans never could. In turn, the optics will inject your perceptual system with a level of agency that is currently unimaginable, by creating a contextually relevant, digital layer on top of the world that you will be able to turn on and off based upon where you are, who you are with, and what you are doing.

In other words, AR is going to make us superhuman. In its perfect form, it won’t be a technology that we “interface” with in a traditional sense. Rather, it’s a technology that will interface with our consciousness in the way that our consciousness experiences the world, that is, with raw human sensory information. That’s a pretty trippy thought, I know, but if you think about every other medium or form of media we consume, it’s typically an externalized version of an event. Be it someone else’s app, writing, film, or website, it’s an externalized medium of someone else’s consciousness. How you interpret it may be subjective, but the content in its essence is entirely objective and created within the mind of another. AR could flip this notion on its head. This is when brain-computer interfaces (BCI) come into play, essentially tapping into and controlling a computer with your mind… That’s a fun one we’ll dive into later (see the startup Kernel to satiate your appetite until then).

Avoiding that rabbit hole and coming back to earth here… the point I am trying to make is that Goldman’s $90 billion projection is just the first hint at this sci-fi trajectory. “Talk about some serious hype!” you might say. And you’d be right. Right now, it’s just that. Hype. So what has to happen for us to reach this converged world? Well, there are some significant technological advancements that need to be made in order to get the form factor down, boost computing power and battery life, and increase data transfer speeds. Achieving these is inevitable. We’re already well on our way. What’s less of a guarantee is how the overall user experience is designed. This will be key to adoption.

In my next post, I’ll address at a high level some of these necessary tech advancements. I’ll also touch upon how the designers of tomorrow’s UI/UX will need to have a deep understanding of how the human mind already works today in order to create a compelling AR experience.

Evan, referencing “That 50 billion only represents around 4% of all the ‘things’ in the world…”: does the other 96% represent basically anything powered by electricity, or the potential “things” that could be connected to IoT, or are they the same thing? Fun reading.