Breaking Through the Screen, Part III: Technical hurdles

The technical challenges holding back AR/VR

(Originally published on May 29th, 2017)

The augmented reality train is picking up serious steam, as the technology is officially beginning to drive corporate strategy within many of the world’s largest and most transformational companies.

In presenting at F8, Zuckerberg anointed AR as a core technology on Facebook’s roadmap, going after the camera as a platform powered by AR.

Naturally, this is precisely Snap’s current strategy. Why else would Facebook be doing it?

And of course, it wouldn’t be a Silicon Valley trend if Google didn’t have its paw in the honey pot. We saw a big announcement of a standalone VR headset with inside-out positional tracking at their recent I/O conference. Why does that matter for AR? Because the tracking technology that powers this headset, Tango, is an essential component of a quality AR experience (more on this tech later). It makes perfect sense that they would make a run at dominating this space. Think about Google’s raison d’être, and then think about the long-term promise of AR: putting the right digital information in front of you, at the right time, and in the right place.

Ah, and then there’s everyone’s favorite sleeping giant (or so they want you to think), Apple. Waiting in the wings and watching as the industry, rife with a gold-rush-like fever, burns through billions in VC cash, gets it all wrong, and then gets stomped on by superior UI/UX and superior timing. Classic.

This assumes history repeats itself, à la the smartphone era, but many signs point to Apple doing just what Apple does best: being deadly sneaky and brilliantly patient.

Tim Cook continues to drop not-so-subtle hints about the importance of AR in Apple’s future. On the more subtle side, however, there is an extremely covert and distinguished team of former Microsoft HoloLens leaders, Hollywood and video game 3D mavens (the true experts in designing virtual worlds), and computer vision rock stars (see the acquisition of Metaio, a leading computer vision AR platform), all cooking up something revolutionary, no doubt. Did I mention covert? These guys are like SEALs trained for a black ops mission. Ask them what they do for a living, and they’ll do whatever it takes to avoid the question. Lie, divert your attention, rappel out of the nearby window and flee into the night. Really makes you wonder what kind of contractual threats Apple has in those employment agreements…

Oh, and at Microsoft? HoloLens. Windows Holographic. Not much else to say.

These are the companies that have and will continue to fundamentally change what it means to be a modern human. So, with AR on their respective critical paths, why not get a little smart on the tech, its current state, and what it’s going to take for it to become mainstream?

Pulling from what knowledge I’ve gained after one year on the front lines, I’ll do my best to lay out some breadcrumbs for you towards AR enlightenment. This should, at least, give you enough knowledge about AR’s core tech to be dangerous. Okay, not enough to wield on the PhD-ridden battlefields of an event like SIGGRAPH, but at least you’ll be armed with some fodder to feed your next cocktail or dinner party conversation.

The question that always jumpstarts this conversation is “When will it just be a pair of glasses?”

You’ll typically have a luddite or two state that they hope they’re dead before we become such cyborgs. This polarizing comment should be enough to kick off what’s sure to be a lively debate.

Someone else will claim we will never wear a computer on our face, followed by commentary from a more rational thinker, noting: “Are we not already cyborgs, looking at the world through the rectangles in our pockets?”

That’s when you, the voice of reason, chime in…

Obviously, these things need to get much smaller and sleeker before we get to the glasses. However, this is inevitable. Moore’s Law will take effect, don’t you fret. What matters most, and what is less inevitable, is the quality of the overall user experience. This will be dictated by the progress we see from peripheral technologies that will revolutionize how we power these devices, transfer digital data, and display virtual objects.

The soapbox is set and the floor is yours.

Reaching the desired form factor by offloading compute to the cloud

In the relatively near term, it’s going to be difficult to achieve a Ray-Ban form factor while keeping the necessary amount of compute and battery power onboard the glasses. But who says we need to keep it onboard? We don’t. We can offload all compute and graphical processing power to virtualized machines in the cloud. To appreciate how much data these devices process, though, it helps to understand some of the core tech powering an AR/VR experience.

First, we have the visuals. To render the high-fidelity images and graphics that take us into different worlds (VR), or bring those virtual worlds to us (AR), a powerful GPU (graphics processing unit) is required. These are the chips that produce all the crazy realistic 3D graphics we see today in modern video games like Call of Duty and Madden, or the CGI in Avatar and Star Wars.

Second is something called SLAM (simultaneous localization and mapping). This is a set of software algorithms that allows the device to understand the world and the user’s position in it, enabling what’s known in the biz as “inside-out” positional tracking. Meaning, the user’s movement and position in relation to virtual objects is tracked from sensors onboard the headset (like with Meta or HoloLens, AR), as opposed to external cameras (like with Vive and Oculus, VR).

What is essentially happening via these sensors and algorithms is that the computer is creating a 3D map of the world. Today, it’s a digital reconstruction of the surfaces and edges in your immediate vicinity (tomorrow is much more), and as you move about this digitally reconstructed world, the computer is constantly processing a mind-numbing amount of data to track you and properly position the content in real time.
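To make "properly position the content" a little more concrete, here is a minimal sketch of the very last step only: once tracking has produced a headset pose, a world-anchored hologram has to be re-expressed in the headset’s own frame every time you move. This is not SLAM itself, and it is flattened to 2D for readability. The `Pose2D` type, the function name, and the axis convention are all assumptions made up for this illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Hypothetical headset pose in the world map: meters and radians."""
    x: float
    y: float
    heading: float  # 0 means the wearer faces along the world's +x axis


def world_to_headset(pose: Pose2D, point: tuple[float, float]) -> tuple[float, float]:
    """Express a world-anchored point in the headset's frame.

    Assumed convention for this sketch: the first value is the distance
    straight ahead of the wearer, the second is the distance to their left.
    """
    dx = point[0] - pose.x
    dy = point[1] - pose.y
    cos_h, sin_h = math.cos(pose.heading), math.sin(pose.heading)
    # Rotate the world-space offset by -heading to undo the wearer's rotation.
    forward = cos_h * dx + sin_h * dy
    left = -sin_h * dx + cos_h * dy
    return forward, left


# A hologram pinned 2 m along the world's +x axis.
hologram = (2.0, 0.0)

facing_it = Pose2D(0.0, 0.0, 0.0)
turned_left = Pose2D(0.0, 0.0, math.pi / 2)  # wearer rotated 90 degrees to the left

print(world_to_headset(facing_it, hologram))
print(world_to_headset(turned_left, hologram))
```

With the wearer facing the hologram it sits 2 m straight ahead; after a 90 degree turn to the left, the same hologram lands 2 m to the right (a negative "left" value). A real headset does this in 3D, for every object, every frame, on top of building the map in the first place.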

Stay with me…

Again, this requires a powerful CPU/GPU that is typically only found in a beefy desktop today, or a high-powered laptop. Microsoft has managed to get some of this processing power onboard their headset, but its graphical processing power is very limited (it can’t render complex graphics, with roughly the graphical processing power of, say, an Android phone), and there’s a reason why it’s so heavy, clunky, and tends to fall off your face.

Lastly, with our device and likely with others soon, there’s the input from hand tracking. A depth camera in the headset is recognizing your hand gestures and allowing you to directly interact with the digital content. This dramatically heightens the suspension of disbelief and causes the user to psychologically treat the holograms like first-class citizens. People often say they can “feel” something when they interact, but this is just their brain playing tricks. It’s a neat trick, no doubt, but add it to the list of computational strains being put on these chips.

So, as you can see, a ton of computational power is needed to render the digital world, and even more is needed to understand the surrounding physical world. However, with breakthroughs in cloud computing, we don’t need to rely on just one piece of localized hardware. We can scale to the power of dozens, if not hundreds, of GPUs/CPUs that are distributed in the cloud.

Problem solved!

Well, not quite… We still need a way to wirelessly transmit all those graphics and data being processed in the cloud back to the device. The good news, though, is that these devices can now reach the glasses form factor we so desire, with the tech consisting primarily of lightweight sensors and optics.

5G networks to support the cloud

Offloading all that computational and graphical processing power to the cloud is great, but we will still need to transmit that data wirelessly into the glasses at immense speeds, with high bandwidth and very little latency. Enter 5G, the next generation of wireless connectivity that will make this all possible.

How does 4G compare to 5G? Well, if 4G is like a fighter jet ripping overhead at Mach 1, 5G is like the Millennium Falcon jumping into light speed. More technically speaking, we will get much faster data speeds in the form of multi-gigabit throughput, meaning the peak amount of data that can travel wirelessly goes from roughly one gigabit per second to ten gigabits per second. To put that in better context, where it might take you close to an hour to download a full-length movie today, it will take only a few seconds with 5G (cue the Road Runner music).
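If you want to check the movie math yourself, here is a quick back-of-the-envelope sketch. The 4 GB movie size and the 10 megabit per second "everyday 4G" speed are assumptions picked for illustration, not measurements; the one and ten gigabit figures are the peak numbers quoted above.

```python
def download_seconds(size_gigabytes: float, link_megabits_per_s: float) -> float:
    """Time to move a file over a link, ignoring protocol overhead."""
    size_megabits = size_gigabytes * 8 * 1000  # 1 byte = 8 bits, 1 GB = 1000 MB
    return size_megabits / link_megabits_per_s


MOVIE_GB = 4.0              # assumed size of a full-length HD movie
EVERYDAY_4G_MBPS = 10.0     # assumed real-world 4G speed, well below peak
PEAK_4G_MBPS = 1_000.0      # one gigabit per second
PEAK_5G_MBPS = 10_000.0     # ten gigabits per second

for label, speed in [
    ("everyday 4G", EVERYDAY_4G_MBPS),
    ("peak 4G", PEAK_4G_MBPS),
    ("peak 5G", PEAK_5G_MBPS),
]:
    seconds = download_seconds(MOVIE_GB, speed)
    print(f"{label}: {seconds:.1f} s ({seconds / 60:.1f} min)")
```

Under these assumptions the everyday 4G case comes out at a little under an hour and the peak 5G case at a few seconds. Note that the "close to an hour" figure only holds at everyday speeds; at the one gigabit peak, the same file would take about half a minute.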

It also means we will have ultra-low latency, which is the time it takes one device to send a packet of data to another device. Today we have about 50 milliseconds of latency with 4G, but with 5G it will drop to 1 millisecond!
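Why do a few dozen milliseconds matter so much for glasses? A rough way to see it is to compare the network delay with how long a single frame stays on the display. The sketch below assumes a 60 Hz display, which is a number chosen for illustration; the 50 ms and 1 ms figures are the ones quoted above, and a real cloud-rendered frame would need a round trip rather than a one-way hop.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """How long one frame is on screen, in milliseconds."""
    return 1000.0 / refresh_hz


def frames_late(network_ms: float, refresh_hz: float) -> float:
    """Network delay expressed as a number of display frames."""
    return network_ms / frame_budget_ms(refresh_hz)


REFRESH_HZ = 60.0  # assumed display refresh rate

for label, latency_ms in (("4G", 50.0), ("5G", 1.0)):
    late = frames_late(latency_ms, REFRESH_HZ)
    print(f"{label}: {latency_ms:.0f} ms of latency is about {late:.2f} frames at {REFRESH_HZ:.0f} Hz")
```

At 4G latency, a frame rendered in the cloud arrives about three frames behind your head movement, which is plenty to make holograms appear to swim. At 1 ms, the network delay is a small fraction of a single frame.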

The blistering speeds of 5G aren’t only important for the connectivity of AR glasses to the cloud. It’s also going to be the foundation for the Internet of Things, powering the billions of other devices and connections that will be coming online over the coming years. And it’s all just around the corner. Singapore is successfully testing 5G networks today, the US will be rolling out tests in select markets in 2018, and full-scale rollouts are slated around the world for 2020.

2020. Mark it on your calendar. It’s going to be a critical year for this digital revolution. Not only are we going to be able to start putting that previously discussed digital layer on top of the world, and look cool while doing it, but we’ll also be experiencing breakthroughs in optical technology that will make it difficult to distinguish the real from the virtual.

I’m talking about holograms that look so real they’ll just blend in with the rest of reality, as if they are truly there in the world. This is going to be possible thanks to photorealistic optics in the form of light field technology.

This is where things could get weird, and the lines between what is real and what is not begin to blur. Monsters Inc. becomes real for your children, holographic C-3PO parades around your living room armed with clever quips, and Justin Bieber’s next performance is live in your living room.

This assumes his relevance in 10 to 15 years is preserved, and at this rate, I’m not worried. Kid’s been on fire lately. Amazing what a good roasting can do for a person.

Realistic optics

In a cloud-enabled world, in which computing and battery power are no longer an issue, we can now crank the dial on the visual quality and realism of the images being produced via what is known as a light field display, a technology that will require an immense amount of computing power in the cloud to work.

This is a serious rabbit hole, rife with complex optical science, but I’ll try to explain this concept in the most simplistic way possible.

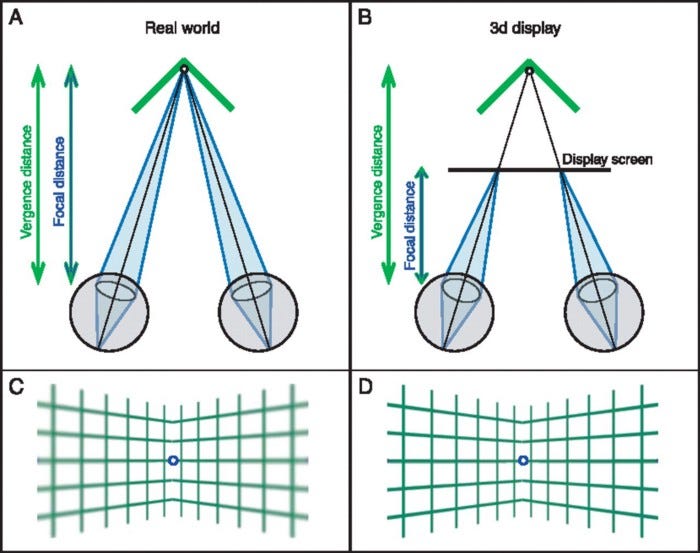

With today’s AR/VR displays, we trick the brain into thinking it’s seeing something 3D with stereoscopic displays. Two images are produced on a screen, and your left and right eye see a slightly different scene. As your brain processes these two scenes, the disparities between the two give the illusion of depth. However, it’s just that, an illusion. Both your eyes and your brain can tell that something isn’t quite right. This is because our eyes depend on another critical depth cue in the real world: focus.

Today’s displays don’t provide focus cues at all. While they are trying to convince your eyes that they should be focusing on something in the distance, the reality is that your eyes are just looking at a screen a few inches away. Thus, the entire image is always in focus, and your brain gets confused. Industry white coats call this the “vergence-accommodation conflict”.

Bear with me here as I try to explain… essentially, your eyes do two things when they look at an object.

First, they point in a direction. As they do so, they are either converging or diverging. If an object is close, your eyes must rotate inwards (converge) to keep it within your field of view. If something is farther away, your eyes rotate outward (diverge) until gazing at an infinite distance, at which point their lines of sight are parallel (i.e., looking straight ahead off at the horizon). So they either converge or diverge, hence, “vergence”.

Second, the lenses in your eyes focus on, or accommodate to, an object, hence, “accommodation”. In a real-world setting, vergence and accommodation talk to each other, working hand in hand to assess the actual distance of an object, making it easy for my brain to understand that the Golden Gate Bridge is currently a few miles away from my current vantage point.

Now, back to putting a screen (or in Meta’s case, a visor) in front of your face. In summation, what is happening here is that your eyes are always “accommodating” to the screen/visor, but they are “converging” to a perceived distance that appears farther off. Fortunately, our brains can figure this out, decoupling vergence from accommodation to compensate, but our eyes are not very happy about it, and eye fatigue and strain ensue.
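For the numerically inclined, the mismatch can be put into rough figures. The sketch below uses simple eye geometry: the vergence angle follows from the distance between your pupils and the distance to the object, and the focus demand is usually expressed in diopters (one over the distance in meters). The 63 mm pupil spacing, the 0.5 m distance at which the display’s optics hold the image in focus, and the 5 m virtual object are all assumed values for illustration.

```python
import math

IPD_M = 0.063  # assumed typical distance between an adult's pupils, in meters


def vergence_angle_deg(distance_m: float, ipd_m: float = IPD_M) -> float:
    """Angle between the two eyes' lines of sight when fixating a point."""
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))


def accommodation_diopters(distance_m: float) -> float:
    """Focus demand on the eye's lens for an object at this distance."""
    return 1.0 / distance_m


def conflict_diopters(virtual_distance_m: float, focal_distance_m: float) -> float:
    """Gap between where the eyes converge and where they must focus."""
    return abs(
        accommodation_diopters(focal_distance_m)
        - accommodation_diopters(virtual_distance_m)
    )


FOCAL_DISTANCE_M = 0.5    # assumed fixed focus distance of the display optics
VIRTUAL_DISTANCE_M = 5.0  # assumed distance at which the hologram appears

print(f"vergence toward the hologram: {vergence_angle_deg(VIRTUAL_DISTANCE_M):.2f} degrees")
print(f"vergence the focus distance would imply: {vergence_angle_deg(FOCAL_DISTANCE_M):.2f} degrees")
print(f"conflict: {conflict_diopters(VIRTUAL_DISTANCE_M, FOCAL_DISTANCE_M):.2f} diopters")
```

In the real world the conflict is zero by definition, because you converge and focus on the same object. On a fixed-focus display it only vanishes when the virtual object happens to sit at the display’s own focus distance.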

Without getting too technical, light field displays (LFDs) can solve this problem. Instead of flat images on a screen, LFDs produce 3D patterns of light rays that mimic how light bounces off objects in the real world. In other words, they can project light into our eye in a way that mimics the natural way we see light, and the world.

In doing this, as the LFD is beaming light directly into the eye, it can create multiple planes to produce the effect of real depth or distance. This means that an image is being created not just once, but created from every conceivable angle, and at numerous levels (or planes) of depth. In turn, this has a huge multiplier effect on the amount of data needed to produce each image, at each plane, from every angle, making the numerous GPUs/CPUs we can scale to in the cloud critical for this type of display technology.

This is the kind of tech that Magic Leap is trying to produce, and this need for intense amounts of compute power is the very reason they are having trouble getting it to market.
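To get a feel for that multiplier effect, here is a naive, uncompressed estimate. Every number in it (resolution, frame rate, 25 viewing angles, 6 depth planes) is a made-up assumption for illustration, and a real system would compress and cull aggressively, so treat this as an upper bound on the shape of the problem rather than a spec for any actual display.

```python
def frame_bytes(width: int, height: int, bytes_per_pixel: int = 3) -> int:
    """Size of one uncompressed RGB image."""
    return width * height * bytes_per_pixel


def display_bytes_per_second(
    width: int,
    height: int,
    fps: int,
    views: int,
    depth_planes: int,
    bytes_per_pixel: int = 3,
) -> int:
    """Raw data rate when every view and depth plane is rendered each frame."""
    return frame_bytes(width, height, bytes_per_pixel) * views * depth_planes * fps


WIDTH, HEIGHT, FPS = 1280, 720, 60  # assumed per-image resolution and frame rate

# Today's stereoscopic display: one image per eye, a single focal plane.
stereo = display_bytes_per_second(WIDTH, HEIGHT, FPS, views=2, depth_planes=1)

# Hypothetical light field: 25 viewing angles across 6 depth planes.
light_field = display_bytes_per_second(WIDTH, HEIGHT, FPS, views=25, depth_planes=6)

print(f"stereo:      {stereo / 1e9:.2f} GB/s")
print(f"light field: {light_field / 1e9:.2f} GB/s")
print(f"multiplier:  {light_field // stereo}x")
```

Even with these modest made-up numbers, the light field needs 75 times the raw data of the stereo pair, which is why the rendering wants to live on a rack of cloud GPUs and why the wireless link back to the glasses matters so much.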

Whew, if you managed to get through and understand all of that, thumbs up. I’m exhausted just writing it… If you really want to geek out on light fields, or if some camera tech PhD in your office scoffs at my description (likely), just check out Wikipedia. It’s all there.

Just around the corner…

So there you have it, a comprehensive view of AR’s core tech and its roadmap. Mic drop, and leave the party.

Okay, maybe a rabbit hole such as this is unlikely to present itself in your next casual social gathering, but perhaps it’ll be useful in other ways, such as assessing where you should be placing your professional bets. And I don’t just mean bets specifically within AR; the impact of this stuff spans almost every industry. The dominoes have been set, and the first that really needs to fall to set it all in motion is that of 5G networks. The headsets are here, the cloud exists, light fields are real; now we just need to give those data speeds a step function boost.

Fortunately, 5G is very close. I mentioned that it will be tested in select US markets next year, and that it is being tested in Singapore as we speak. Another telling sign is AT&T’s recent $1.2 billion purchase of a nondescript little company called Straight Path Communications, which owns several wireless spectrum licenses (i.e., airwaves) required for 5G connectivity. Verizon made a similar purchase earlier this year.

The last piece of the “AR adoption” equation that we’ve yet to discuss is around software and content. With all this technology in place, we’ll have a content pipeline directly to the eye that will allow us to turn the world into a spatial computer. But the world’s a big place… who is going to make all that 3D content? Where will it come from?

In the enterprise, it already exists; it just needs to be mined and refined. For the prosumer/consumer, the 3D tools exist to create it at scale; it’s more a matter of how these tools will be democratized, and by whom. You don’t have to look much farther than Hollywood to figure it out…

Sounds like a great topic for another post.

Until next time.